An Introduction to GPU DataFrames for Pandas Users - Data Science of the Day - NVIDIA Developer Forums

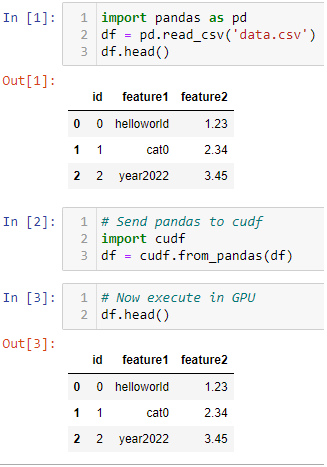

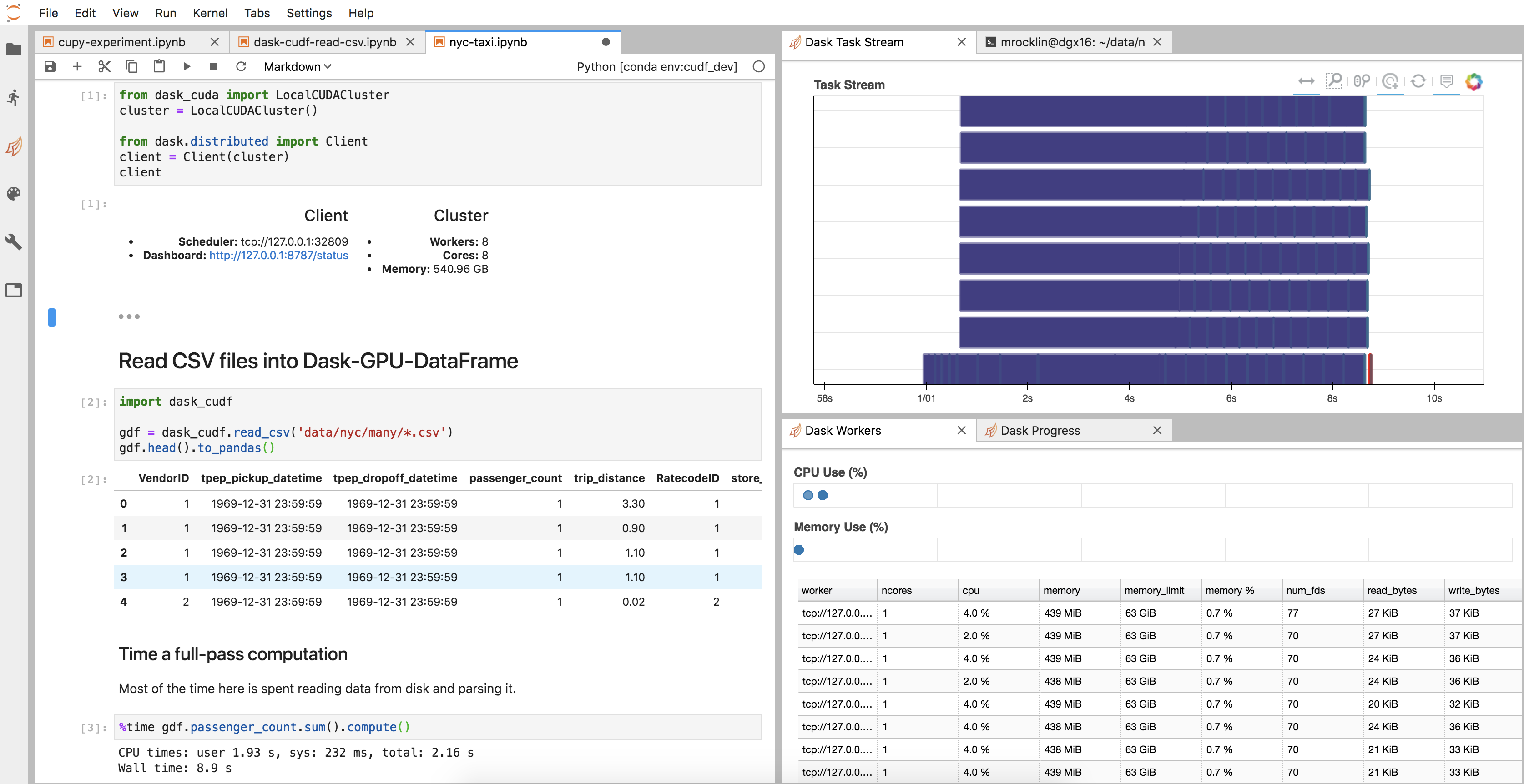

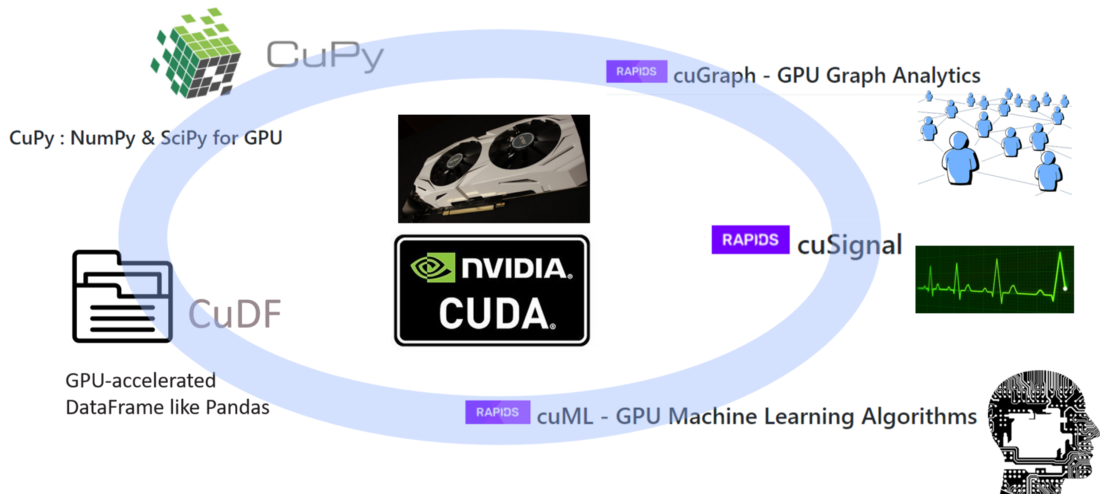

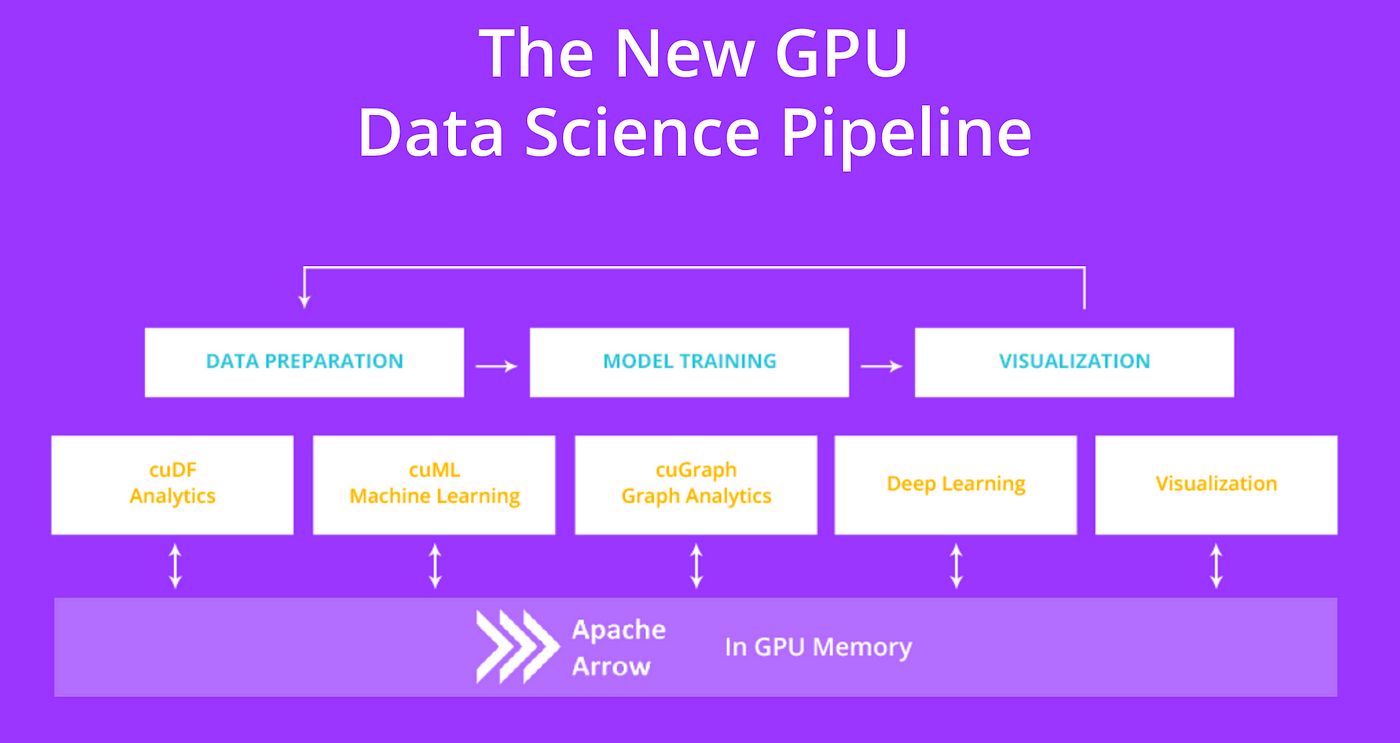

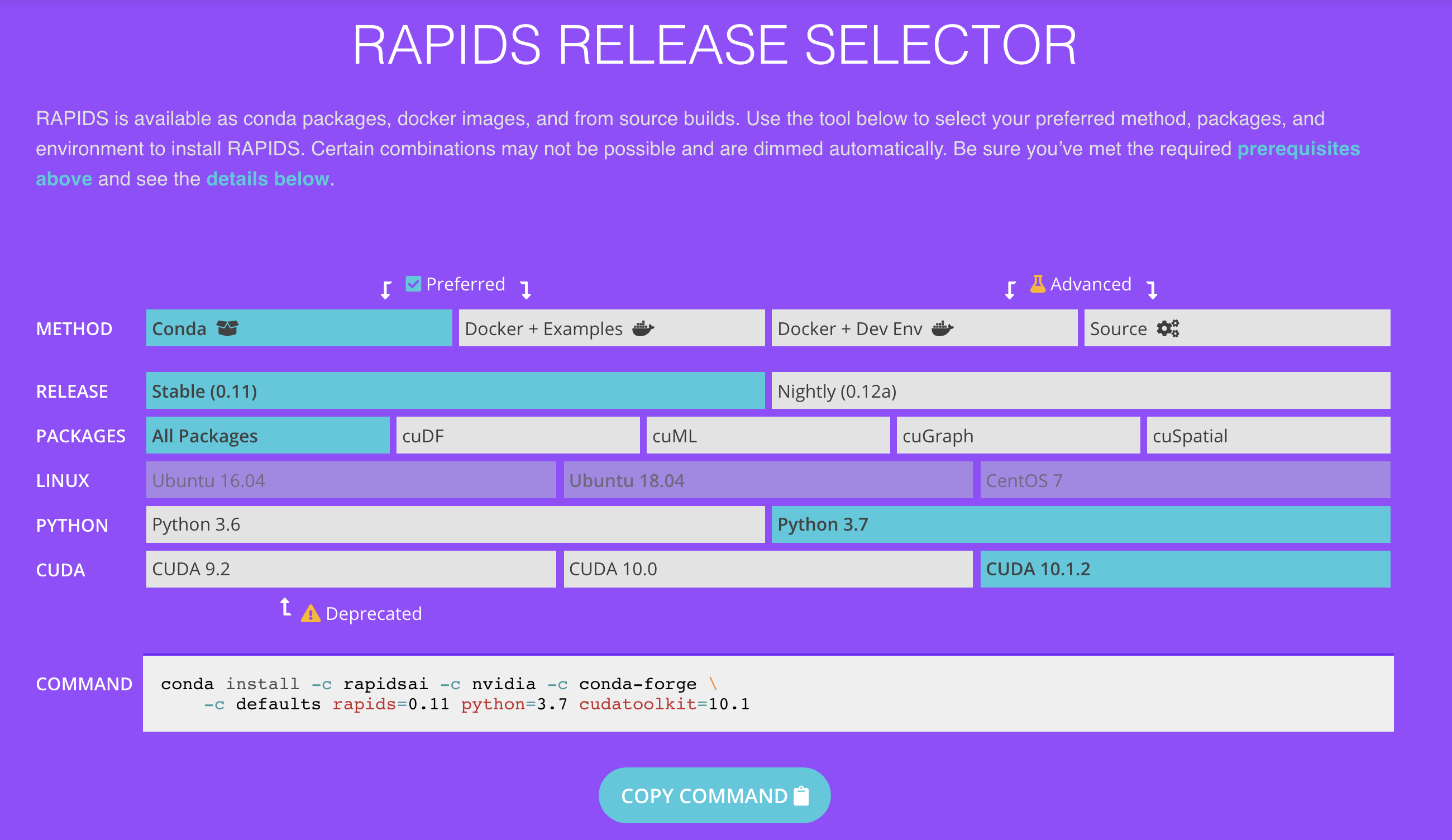

Python Pandas Tutorial – Beginner's Guide to GPU Accelerated DataFrames for Pandas Users | NVIDIA Technical Blog

Panda RGB GPU Backplate Custom Made for ANY Graphics Card Model now with Vent Cut Outs and ARGB (Addressable LEDs) - V1 Tech